In 2025, our world runs on a resource more valuable than oil and more strategic than gold. It’s invisible, microscopic, and yet it powers everything from our smartphones and cars to global financial markets and advanced artificial intelligence. We’re talking about chip technology. These tiny, intricate slices of silicon are the silent giants driving the modern digital empire, and the United States is in a full-sprint race to define their future.

The past few years have been a wake-up call for the U.S. chip industry. We’ve moved from simply enjoying the benefits of chip technology to recognizing it as a critical pillar of national security, economic stability, and future innovation. From silicon wafers in massive fabrication plants to the AI chips that U.S.-based companies are designing, the evolution of chip technology is rewriting the global tech map. This article explores this revolution, from its silicon origins to its quantum future.

What Is Chip Technology?

At its simplest, chip technology refers to the design, manufacturing, and application of integrated circuits (ICs), also called microchips or, more loosely, semiconductors. Think of a chip as the “brain” of every electronic device. It’s a tiny, complex set of electronic circuits etched onto a small, flat piece of semiconductor material, usually silicon.

This “brain” is composed of billions of microscopic switches called transistors, which can turn on or off (a ‘1’ or a ‘0’) to process data and execute commands. The magic of chip technology is the ability to shrink these transistors to the nanometer scale, packing more computing power into the same space with each new generation. This is the hardware that makes all modern software possible, from your phone’s operating system to advanced AI models.
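To make the idea of on/off switches concrete, here is a minimal Python sketch of how simple logic gates combine into arithmetic. It models a NAND gate as a function and builds a one-bit “half adder” from it. The function names are illustrative assumptions, and a real chip implements these gates in transistors rather than in software.

```python
def nand(a: int, b: int) -> int:
    """NAND gate: the output is 0 only when both inputs are 1."""
    return 0 if (a == 1 and b == 1) else 1

def xor(a: int, b: int) -> int:
    """XOR built from four NAND gates."""
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))

def and_gate(a: int, b: int) -> int:
    """AND built from two NAND gates (NAND followed by an inverter)."""
    n = nand(a, b)
    return nand(n, n)

def half_adder(a: int, b: int) -> tuple:
    """Add two bits and return (sum_bit, carry_bit)."""
    return xor(a, b), and_gate(a, b)

# Print the full truth table for adding two single bits.
for a in (0, 1):
    for b in (0, 1):
        print(a, "+", b, "->", half_adder(a, b))
```

Chaining many such adders together is, in simplified form, how a processor adds larger numbers.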

The Evolution of Chip Technology: From Silicon to Supercomputers

The history of chip technology is defined by one relentless guiding principle: Moore’s Law. First stated in 1965 by Gordon Moore, who went on to co-found Intel, and revised by him in 1975, this observation predicted that the number of transistors on a microchip would double approximately every two years, while the cost per transistor would decrease.

For roughly 50 years, this observation held up remarkably well. It’s the reason your smartphone today has many orders of magnitude more computing power than the computers that sent men to the moon.
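The doubling rule can be written as a simple formula: the projected count equals the starting count multiplied by 2 raised to the number of elapsed doubling periods. The Python sketch below applies it, assuming a two-year doubling period and using the Intel 4004 of 1971, with its commonly cited figure of about 2,300 transistors, as a starting point. The function name is illustrative, and the result is a projection rather than a measurement of any real chip.

```python
def projected_transistors(start_count: float, start_year: int, year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count assuming steady doubling every N years."""
    doublings = (year - start_year) / doubling_period_years
    return start_count * 2 ** doublings

# Starting point: Intel 4004 (1971), about 2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    count = projected_transistors(2300, 1971, year)
    print(f"{year}: about {count:,.0f} transistors")
```

Fifty years of doubling every two years is 25 doublings, which takes the projection from thousands to tens of billions of transistors.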

- 1950s-1960s: The first integrated circuits are created by U.S. pioneers Jack Kilby and Robert Noyce, laying the foundation for all modern electronics.

- 1970s-1990s: The rise of the microprocessor (like the Intel 4004) puts a “computer on a chip.” This fuels the PC revolution, dominated by U.S. chip industry giants.

- 2000s-2010s: The “fabless” model, pioneered in the 1980s, becomes dominant. U.S. companies like NVIDIA, AMD, and Qualcomm focus on high-level design, while specialized foundries in Asia (like TSMC in Taiwan) handle the mass-scale manufacturing.

- 2020s: Moore’s Law begins to slow down. As transistor features approach atomic dimensions, the physical limits of silicon become a barrier. This slowing has forced a new wave of semiconductor innovation. The industry is pivoting from simply making transistors smaller to making chips smarter through new designs, materials, and specialized architectures.

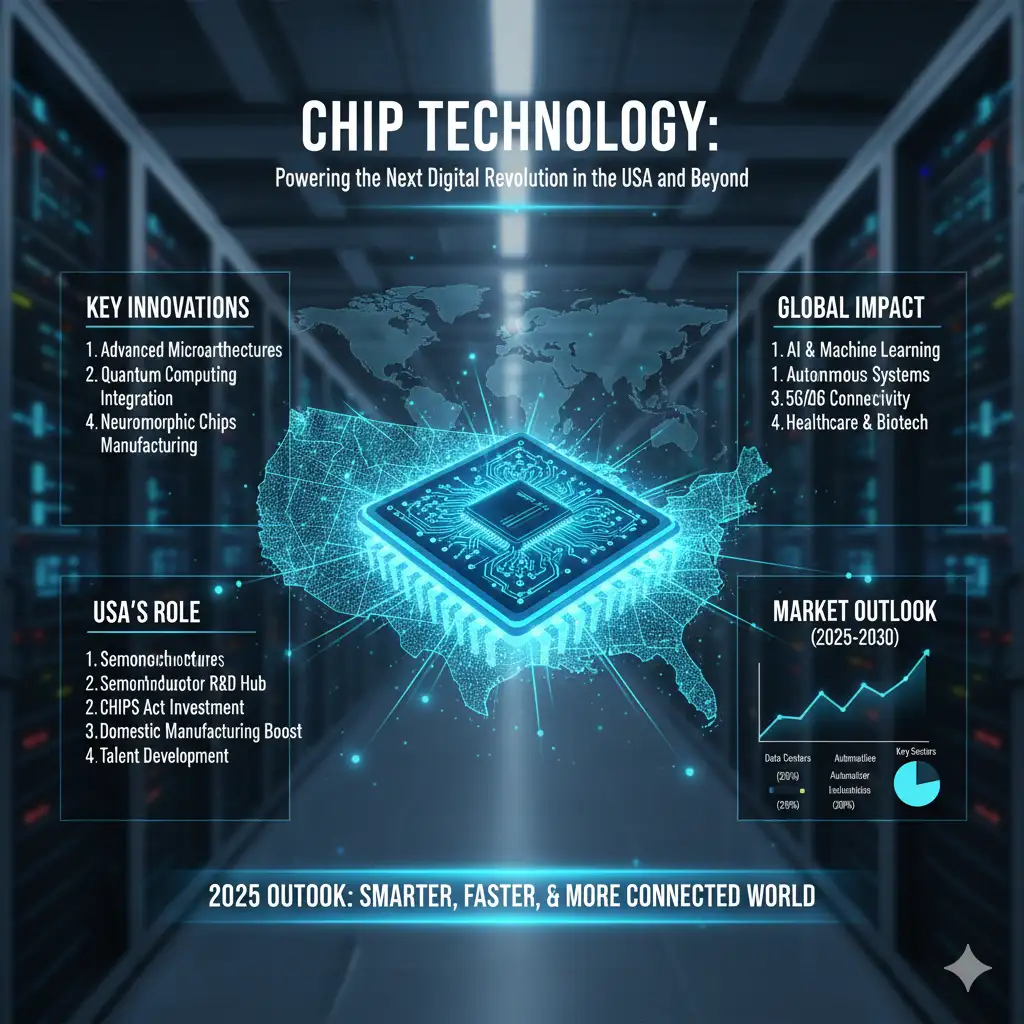

The USA’s Role in Global Chip Technology Leadership

The USA remains the undisputed global powerhouse in chip technology design. U.S. companies dominate the most valuable and complex parts of the semiconductor ecosystem:

- Chip Design: Companies like NVIDIA (in AI and graphics chips), AMD (in CPUs and GPUs), Qualcomm (in mobile processors), and Intel (in CPUs) set the global standard.

- Manufacturing Equipment: U.S. companies like Applied Materials and Lam Research produce the highly complex tools used to make chips.

- Software (EDA): The electronic design automation (EDA) software used to design most of the world’s advanced chips comes predominantly from U.S.-based firms.

However, the chip shortage that began in 2020 exposed a critical vulnerability: while the U.S. designs many of the world’s most advanced chips, over 90% of the most advanced manufacturing happens in Asia, primarily Taiwan.

This realization led to a landmark piece of policy: the CHIPS and Science Act of 2022. This U.S. law provides roughly $52 billion in funding, most of it as incentives for companies to build new semiconductor fabrication plants (“fabs”) on American soil. The CHIPS Act is a massive strategic investment in U.S. chip manufacturing, designed to secure the supply chain, create jobs, and ensure the next generation of chip technology is “Made in America.”

Inside the Semiconductor Manufacturing Process

Creating a modern microchip is arguably the most complex manufacturing process ever devised by humankind. It’s a symphony of physics, chemistry, and engineering that takes months and costs billions.

- Design: Engineers design the chip’s intricate, multi-layered circuit pattern using sophisticated EDA software. This blueprint is the “intellectual property.”

- Wafer Fabrication: The process begins with a large, ultra-pure silicon wafer. This all happens in a “cleanroom,” a facility thousands of times cleaner than an operating room, as a single speck of dust can ruin a chip.

- Photolithography: This is the heart of semiconductor fabrication. The wafer is coated with a light-sensitive material (photoresist). For the most advanced chips, a machine made only by the Dutch company ASML uses extreme ultraviolet (EUV) light to project the chip’s pattern onto the wafer, like a high-tech stencil.

- Etching & Doping: The patterned areas are then etched away with chemicals, and other materials are “doped” into the silicon to change its electrical properties, building the transistors layer by layer. This process is repeated hundreds of times.

- Testing & Packaging: The completed wafer, now containing hundreds of individual chips, is tested. Defective chips are marked, and the good ones are cut from the wafer and packaged into the black “chips” we recognize.
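The testing step comes down to simple arithmetic: how many chips fit on a wafer, and what fraction of them work. The Python sketch below uses a common textbook approximation for gross dies per wafer and a basic Poisson yield model. The wafer diameter, die area, and defect density are hypothetical inputs chosen only for illustration, not figures from any real fab.

```python
import math

def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate die count: wafer area over die area, minus an edge-loss term."""
    d = wafer_diameter_mm
    whole_area_term = math.pi * (d / 2) ** 2 / die_area_mm2
    edge_loss_term = math.pi * d / math.sqrt(2 * die_area_mm2)
    return int(whole_area_term - edge_loss_term)

def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Poisson model: probability that a die contains zero killer defects."""
    return math.exp(-die_area_mm2 * defects_per_mm2)

# Hypothetical example: 300 mm wafer, 100 mm^2 die, 0.001 defects per mm^2.
dies = gross_dies_per_wafer(300, 100)
fraction_good = poisson_yield(100, 0.001)
print(f"Gross dies per wafer: {dies}")
print(f"Estimated yield: {fraction_good:.1%}")
print(f"Estimated good dies: {int(dies * fraction_good)}")
```

The model also shows why large chips are costly: in this formula, a bigger die means fewer candidates per wafer and a lower chance that each one is defect-free.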

This incredibly complex process is why new U.S. chip production facilities, like those being built by Intel in Ohio and TSMC in Arizona, are multibillion-dollar megaprojects.

Chip Technology and Artificial Intelligence (AI)

The single biggest driver of chip technology innovation today is the AI revolution.

Traditional chips (CPUs) are great at “serial” tasks: working through a small number of complex instruction streams, one step after another, very quickly. But AI models, like the ones that power ChatGPT or create AI art, require “parallel” processing: doing thousands of simple calculations simultaneously.
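A minimal Python sketch shows why this kind of workload parallelizes so well. In a matrix-vector product, a core operation in neural networks, every output element is an independent dot product, so none has to wait for another. The function names are illustrative assumptions. The thread pool here only demonstrates that the tasks are independent; it is not how a GPU works internally and will not run faster than the plain loop in standard Python.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, vec):
    """Dot product of one matrix row with the input vector."""
    return sum(r * v for r, v in zip(row, vec))

def matvec_serial(matrix, vec):
    """Compute each output element one after another."""
    result = []
    for row in matrix:
        result.append(dot(row, vec))
    return result

def matvec_parallel(matrix, vec):
    """Submit every row as its own task; no task needs another task's result."""
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda row: dot(row, vec), matrix))

weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
inputs = [1, 0, -1]
print(matvec_serial(weights, inputs))
print(matvec_parallel(weights, inputs))
```

Both functions return the same list, because the order in which the rows are processed does not affect the result.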

This is where the Graphics Processing Unit (GPU) comes in. NVIDIA, a U.S. chip industry leader, found that its GPUs, originally designed to render graphics in video games, were well suited to the math behind AI. This helped ignite the AI boom.

Today, the AI chips designed by U.S.-based companies are the most sought-after hardware in the world.

- GPUs: NVIDIA’s A100 and H100 GPUs are the gold standard for training large AI models.

- TPUs (Tensor Processing Units): Google designs its own custom chip technology, called TPUs, to efficiently run its AI services.

- NPUs (Neural Processing Units): These are specialized AI chips or “accelerators” built into next-gen processors from Apple (the Neural Engine), Qualcomm, and Intel. They are designed to run AI tasks on your device (like your phone or laptop) quickly and efficiently.

This AI hardware innovation is the engine of the entire AI economy, and a core focus of modern chip technology.

Edge Computing and Chip Technology

The AI boom created a secondary boom: edge computing. It’s not always practical to send data to a massive cloud data center to be processed. A self-driving car, a smart security camera, or a factory robot needs to make decisions in milliseconds.

This requires “AI at the edge,” which in turn requires a new class of edge computing chips. These chips must be:

- Low-Power: It often runs on a battery (as in a smartphone or an IoT sensor).

- Efficient: It must perform complex AI tasks without overheating.

- Small: It needs to fit inside compact smart devices.

Companies like Apple (with its M-series and A-series chips) and Qualcomm (with its Snapdragon chips) are leaders in the U.S. edge computing market. They are packing powerful AI “neural engines” into the chip technology that powers our daily lives, from consumer electronics to advanced medical devices.

Quantum Chips: The Future Frontier

If AI chips are the present, quantum computing hardware is the future. This is a fundamentally different type of chip technology that moves beyond the 0s and 1s of classical computing.

- Classical Chips: Use “bits” (a 0 OR a 1).

- Quantum Chips: Use “qubits,” which can be a 0, a 1, or a weighted combination of both at once (superposition).

This could allow quantum computers to solve a small, specific set of problems that are impractical for any classical supercomputer, such as simulating new molecules for drug discovery, designing new materials, or (notoriously) breaking some widely used forms of encryption.
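Superposition can be illustrated with a tiny state-vector simulation. The Python sketch below assumes a single ideal qubit with no noise, represented as a pair of amplitudes, and applies a Hadamard gate to put it into an equal superposition. The function names are illustrative and do not come from any quantum computing library.

```python
import math

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state (amplitude_0, amplitude_1)."""
    a0, a1 = state
    s = 1 / math.sqrt(2)
    return (s * (a0 + a1), s * (a0 - a1))

def measurement_probabilities(state):
    """Probability of reading 0 or 1: the squared magnitude of each amplitude."""
    a0, a1 = state
    return (abs(a0) ** 2, abs(a1) ** 2)

zero = (1.0, 0.0)            # a qubit that is definitely 0
superposed = hadamard(zero)  # an equal mix of 0 and 1

p0, p1 = measurement_probabilities(superposed)
print(f"P(0) = {p0:.2f}, P(1) = {p1:.2f}")
```

Measuring the superposed qubit gives 0 or 1 with equal probability. Simulating many qubits this way on a classical machine requires tracking a number of amplitudes that doubles with every added qubit, which is one reason real quantum hardware is of interest.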

The USA is a world leader in this field, although the manufacturing of quantum chips remains highly experimental.

- IBM and Google Quantum AI are building quantum computing chips using superconducting circuits, which must be kept at temperatures colder than deep space.

- IonQ, a U.S. startup, uses “trapped ions” (electrically charged atoms) as its qubits.

This field is still in its infancy, but it represents the next great frontier for chip technology.

Challenges in the Chip Technology Industry

Despite the incredible innovation, the chip technology industry faces immense challenges:

- Global Supply Chain Risk: The 2020-2022 chip shortage that hit U.S. consumers and automakers was a brutal lesson. The world’s reliance on a few fabs in Taiwan, a geopolitical flashpoint, is a major economic and national security risk.

- Geopolitical Tensions: The USA-China “chip war” is a defining issue. The U.S. has implemented strict export controls to prevent advanced chip technology and manufacturing tools from reaching China’s military, creating a deep divide in the global tech ecosystem.

- Cost and Complexity: Moore’s Law is slowing not only because of physical limits but also because each new generation is becoming astronomically expensive. A single next-generation “fab” can cost over $20 billion, and R&D costs are soaring.

- Talent Shortage: The CHIPS Act is funding new fabs in the USA, but there’s a critical shortage of skilled semiconductor engineers, technicians, and Ph.D.s to run them.

Chip Technology and National Security

The challenges above lead directly to this point: chip technology is now a cornerstone of national security. A nation that cannot secure its own supply of next-gen processors cannot secure its future.

Modern defense systems are supercomputers. An F-35 fighter jet, an autonomous drone, a cybersecurity defense network, or an AI-powered satellite system runs on the most advanced chip technology available.

This is why the CHIPS Act is not just an economic bill; it’s also a defense initiative. The Pentagon is partnering directly with U.S. chipmakers to create “secure enclaves” and trusted foundries on U.S. soil. The goal is to ensure that the chips powering military systems and critical infrastructure are protected from tampering and better insulated from foreign supply chain disruptions.

The Green Revolution: Sustainable Chip Technology

There’s an inconvenient truth about chip technology: manufacturing fabs are incredibly resource-intensive, using massive amounts of energy and water. Furthermore, the world’s data centers, which are increasingly filled with AI chips, consume an estimated 1-2% of all global electricity.

This has made sustainable chip manufacturing and design a top priority.

- Green Fabs: Companies like Intel and TSMC are investing billions in “green” fabs that aim to run on renewable energy and recycle over 80% of their water.

- Eco-Friendly Chip Design: The biggest gains come from designing chips that are more power-efficient. This is a central goal of edge computing chips and AI chips: to perform more calculations per watt of energy.

- Materials Innovation: Researchers are exploring new, more sustainable semiconductors and manufacturing processes that use less-toxic materials and reduce the carbon footprint.

This “green chip” revolution is not just good for the planet; it’s good for business, as energy efficiency is a primary selling point for chip technology in everything from data centers to smartphones.

The Future of Chip Technology: Predictions for 2030

The semiconductors that U.S.-based companies are building for the future will look vastly different from today’s. With Moore’s Law fading, innovation is exploding in new directions.

- 3D Chip Stacking and “Chiplets”: If you can’t make transistors smaller, you go modular and vertical. Chiplets are small, specialized dies that are combined in a single package, placed side by side or stacked on top of each other in 3D. This is a key to building next-gen processors that are more powerful and efficient.

- AI-Designed Chips: Engineers are now using AI to help design the next generation of AI chips. In effect, chip technology is helping to design itself, which could significantly speed up innovation.

- New Materials: We’re moving beyond silicon. Researchers are heavily invested in new materials like graphene, carbon nanotubes, and gallium nitride (GaN) that promise faster, more efficient performance.

- Specialization: The “one-size-fits-all” CPU is giving way to specialized designs. The future builds on the “System-on-a-Chip” (SoC) already found in smartphones, with dedicated processors for graphics (GPU), AI (NPU), communications, and more, all working in harmony.

The chip industry forecast for 2030 is one of hyper-specialization, 3D integration, and a chip technology landscape fully dominated by the needs of AI.

How Chip Technology Impacts Everyday Life

It’s easy to get lost in the high-tech jargon, but the impact of chip technology is deeply personal. It’s the invisible engine of your daily life.

- Your Smartphone: A pocket supercomputer powered by an advanced SoC.

- Your Car: A modern vehicle contains 1,000-3,000 chips, managing everything from the engine and brakes to the infotainment system and safety features.

- Your Home: Your Wi-Fi router, smart TV, smart speaker, and even your microwave run on embedded chips.

- Your Health: Medical devices like pacemakers, MRI machines, and glucose monitors rely on sophisticated chip technology to save lives.

- Your Entertainment: Gaming consoles like the Xbox and PlayStation, and the massive data centers that stream Netflix and Spotify, are all powered by high-performance chips.

Every U.S. household benefits daily from the relentless advancement of chip technology, often without ever seeing the chip itself.

Conclusion: Why Chip Technology Is the Core of the Future

Chip technology is the fundamental building block of the 21st century. It’s the invisible backbone of our economy, our security, and our modern way of life. From the AI chips that are unlocking new forms of intelligence to the sustainable semiconductors that promise a greener future, this field is where the most important innovations are happening.

The USA is at a critical juncture, reinvesting heavily in U.S. chip manufacturing through the CHIPS Act to secure its supply chain and maintain its technological leadership. The race is on to build the next generation of chip technology that will power the quantum and AI revolutions.

As the world continues its rapid digital transformation, one thing is certain: the nations and companies that lead in chip technology will lead the future. The next decade of human progress will be defined by these tiny, intricate, and profoundly powerful marvels of engineering.
